Voice user interfaces are a growing presence in the UX world, but what makes a good voice UI? Check out these key usability guidelines to find out.

Times are a-changing. Oh, if only Bob Dylan had known that asking a small metal box to turn on the coffee maker while you shower would be a reality by 2019. Voice user interface design still feels futuristic, even if the idea of giving voice commands to digital products has been around for a while.

Voice user interface design has been a hot topic for the past few years. With the rise of Alexa and Google Assistant, among others, people are growing increasingly open to this new technology. As designers, it may be time to look more closely at voice user interfaces, and at what separates a good one from a terrible one.

There are many unknowns when it comes to voice user interfaces (VUIs). As you would expect from such a young field, we are learning new things about voice UI design every day. In the meantime, let’s go over what we do know, and put that knowledge to good use.

Creating a voice user interface is quite different from your regular UX design process, mainly because a voice user interface differs from other digital products in some key ways:

- It has an invisible interface that users can’t refer back to

- It effectively has no navigation for the user

- Its only interaction with the user is verbal

- It’s unfamiliar to most users, who’ve grown used to graphical user interfaces

These may seem like small distinctions at first, but they have a huge impact on how you design the voice user interface. Let’s go over two key aspects that mirror the classic UX process, user research and user flows, and then dive into the world of voice UI and usability.

If you want the full overview of the basics, do check out our post on voice user experience design for mere mortals.

This product may be quite different from a classic website or app, but it still has the same goal: to solve the user’s problem. And so, before you start considering the intricate ways you can create a voice user interface, you have to do what all designers do before they get their prototyping on. You gotta carry out the research.

Your research will look much like classic user research. You still need a target market and a problem it suffers from, and you still need to create a product that solves this problem for users.

So what changes from graphical to voice interface design? Aside from the classics, which still play an important part in product design, you’ll need to pay attention to the communication traits your target users share.

In this case, you’ll need to watch closely in order to grasp the language they use. And no, we don’t mean “English”. Of course, your users may predominantly speak English – but what kind of English? American? Do they tend to use the same expressions? Do they have any peculiarities about the way they talk?

It’s important to analyse how users communicate verbally, so your product is ready to catch the terms and expressions that matter. If you want to hear more about designing for dialogue, check out our Q&A on conversational UI with Aviva’s Senior Experience Designer.

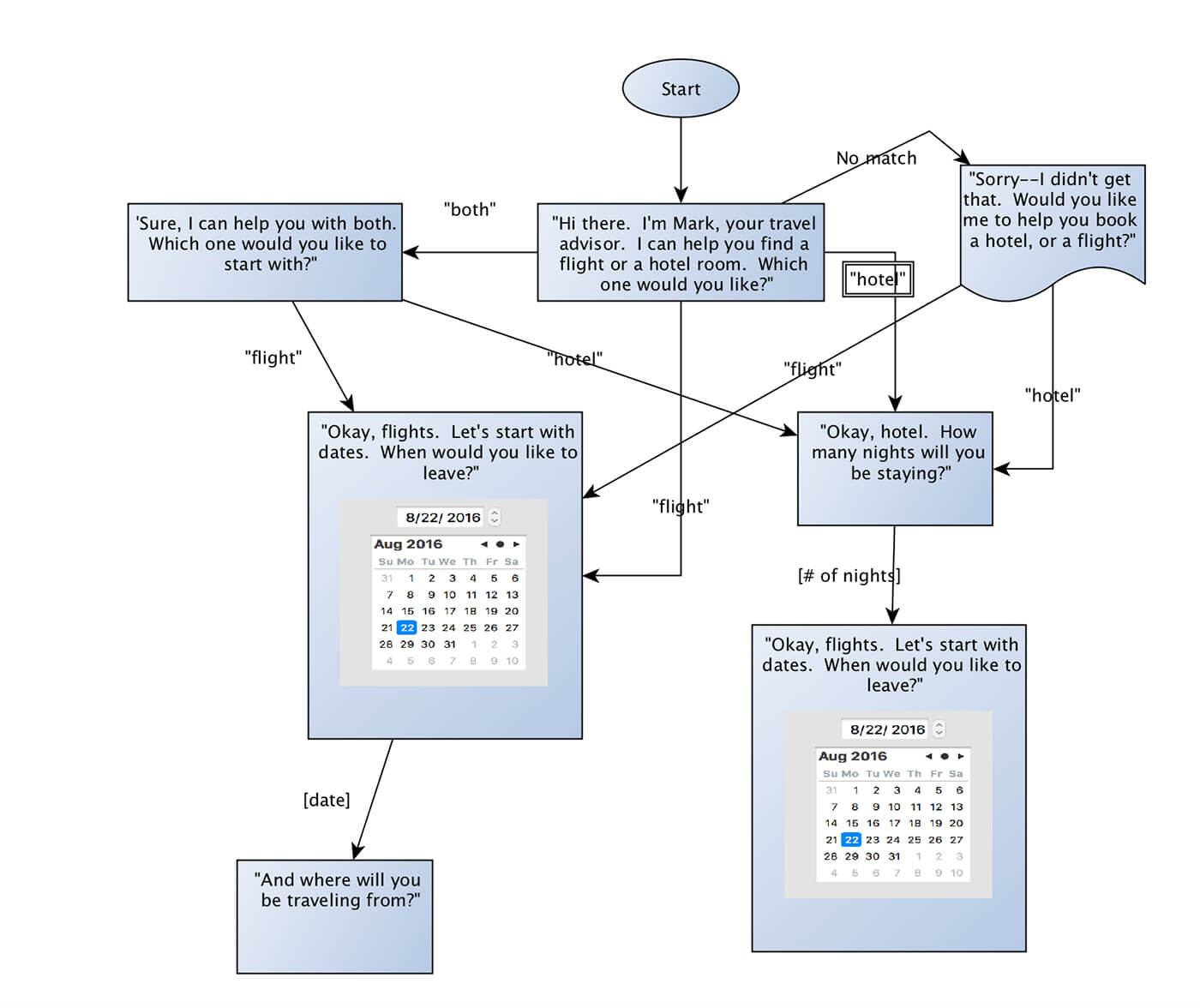

Start off by designing the navigation flow of information and dialogue. What will the user need to hear to get from point A to point B? Mapping out your user flow will help you to visualize what the user needs to do to reach their destination in the user journey.

To create your flow, sketch or prototype a diagram of all the possible branches a user can go down to reach their end goal. List every path the user could take to get to the next stage of their journey, and write out the dialogues you expect users to have with the VUI along each one.

Image by Cathy Pearl

The trick here is a slight change in mentality. Classic graphic interface user flows tend to follow actions taken by the user – these will determine where the user will go and what they will experience. In voice user interfaces, however, the name of the game is context. Your user flow will be based on the context of what users say as opposed to what they do.
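To make the branching concrete, here is a minimal Python sketch that stores a flow as a map from each dialogue state to what the user might say next, then lists every path to the end goal. Transitions are keyed on utterances, reflecting a flow driven by what users say. The coffee-ordering states, the utterances and the `all_paths` helper are all hypothetical, chosen only for illustration; in practice flows are usually drawn in a diagramming or prototyping tool rather than code.

```python
from typing import Dict, List

# Hypothetical flow for a coffee-ordering voice app. Each key is a dialogue
# state; each value maps something the user might say to the next state.
FLOW: Dict[str, Dict[str, str]] = {
    "welcome": {"order a coffee": "ask_size", "what can you do": "help"},
    "help": {"order a coffee": "ask_size"},
    "ask_size": {"small": "ask_milk", "large": "ask_milk"},
    "ask_milk": {"with milk": "confirm", "no milk": "confirm"},
    "confirm": {},  # end goal: the order is placed
}


def all_paths(flow: Dict[str, Dict[str, str]], start: str, goal: str) -> List[List[str]]:
    """List every sequence of user utterances that leads from start to goal.

    States already visited on the current path are skipped, so loops in the
    flow cannot cause infinite recursion.
    """
    paths: List[List[str]] = []

    def walk(state: str, visited: set, said: List[str]) -> None:
        if state == goal:
            paths.append(said)
            return
        for utterance, next_state in flow.get(state, {}).items():
            if next_state not in visited:
                walk(next_state, visited | {next_state}, said + [utterance])

    walk(start, {start}, [])
    return paths


for path in all_paths(FLOW, "welcome", "confirm"):
    print(" -> ".join(path))
```

Even this tiny flow produces eight distinct paths, which is why listing them explicitly helps you spot dialogues you have not written yet.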

Cathy Pearl, VP of UX at Sensely, makes a great point about the flow of VUIs in her article “Basic principles for designing voice user interfaces”. Depending on what kind of product you’re creating, you may have only a handful of branches or well over 1,000 of them. The more branches there are, the more you need to categorize them to avoid blind spots.
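One way to categorize branches and catch blind spots is sketched below, assuming each branch is recorded as a (state, utterance, next state, category) tuple. The tuple layout, the category names and the `blind_spots` check (states a user can reach but never leave) are illustrative assumptions, not a method taken from Pearl’s article.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical branches: (state, what the user says, next state, category).
Branch = Tuple[str, str, str, str]

BRANCHES: List[Branch] = [
    ("welcome", "order a coffee", "ask_size", "ordering"),
    ("welcome", "what can you do", "help", "support"),
    ("ask_size", "small", "ask_milk", "ordering"),
    ("ask_size", "large", "ask_milk", "ordering"),
    ("ask_milk", "with milk", "confirm", "ordering"),
    ("ask_milk", "no milk", "confirm", "ordering"),
]
GOALS: Set[str] = {"confirm"}


def by_category(branches: List[Branch]) -> Dict[str, List[Branch]]:
    """Group branches so each category can be reviewed on its own."""
    groups: Dict[str, List[Branch]] = defaultdict(list)
    for branch in branches:
        groups[branch[3]].append(branch)
    return dict(groups)


def blind_spots(branches: List[Branch], goals: Set[str]) -> List[str]:
    """Return states a user can reach but never leave, excluding end goals."""
    reachable = {nxt for _, _, nxt, _ in branches}
    has_exit = {state for state, _, _, _ in branches}
    return sorted(reachable - has_exit - goals)


for category, items in by_category(BRANCHES).items():
    print(category, len(items))
print("Dead ends:", blind_spots(BRANCHES, GOALS))
```

Here the `help` state was deliberately left without a way out, so the check flags it; a real review would also look for states with no response to unexpected input.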

After all that thinking, analysing and planning, comes the real question: what makes a voice UI usable? What is usability for voice products and what does it mean for the designers who create them?

Luckily for us, these questions have been asked before. In their conference paper “Design guidelines for hands-free speech interaction”, L. Clark et al. (2018) did something all designers can get on board with: they took a classic and put a modern spin on it.

Most designers are familiar with Nielsen’s classic usability heuristics for UI design. They lay down a set of 10 rules that separate usable web design from unusable products that users won’t enjoy. L. Clark et al. (2018) analysed and adapted these rules to fit voice UIs, and we are so glad they did.

Here is an overview of what the paper found.

The first heuristic, visibility of system status, holds that the system should always keep users aware of what is happening by offering feedback. But how can that be achieved when there is no visible interface? It’s a real challenge, as users are likely to be unfamiliar with the workings of a voice user interface.

In general, designers have to find new and creative ways to account for these factors in their voice user interfaces. Some visibility issues may be as simple as adding a blinking light to the physical product, as is the case with Amazon’s Alexa.

Others may be more challenging, such as designing voice search UI patterns or letting users know the product has understood their command by offering some verbal confirmation.

Next comes the match between the system and the real world, and language is a complex thing. In graphical interfaces, matching the real world can be as simple or as elaborate as you want it to be, a point made well in our post on flat website design.

But when it comes to voice user interfaces, that match has to live up to a certain standard. That standard can vary from user to user, but in general you want people to be able to understand your product when it talks. Technology may not have advanced to a point where the product can carry out natural conversations with users, but not all is lost.

In broad strokes, for this match to exist in your VUI you need to narrow down what your users sound like.

For example, if your voice UI is meant to assist surgeons in hospitals, you’ll want it to use technical terms so that there’s never any confusion. If your voice UI is meant to help people shuffle their music while driving, it shouldn’t use technical terms at all, because in that context they wouldn’t sound natural.

Remember that users are already unfamiliar with talking to interfaces, so you want to make that communication as easy as possible.

On user control and freedom, the authors came to a fairly reasonable conclusion: since users can’t see the voice user interface, they generally feel less in control. This can be a real challenge, as users might have trouble undoing tasks or simply exerting control over the system.

Some designers have created a keyword that interrupts the interaction, cancelling whatever is being done at the time or scrapping future tasks and returning the system to sleep mode. The right choice of words, and what they do, will largely depend on the kind of VUI and the type of user it serves.

It is true that at the moment, voice user interfaces are aimed at carrying out small and fairly simple tasks – like reciting the weather forecast or changing the music. But even with small tasks, you may want to consider including a stop command so that users can put an end to a task if they feel it’s no longer relevant or needed.
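A stop keyword can be sketched as a check that runs before any other handling. In this minimal Python example, the `STOP_WORDS` set and the `VoiceSession` class are hypothetical: a stop word clears every queued task and puts the session back to sleep, as described above.

```python
import string
from typing import List

# Hypothetical stop keywords; the right words depend on your product and users.
STOP_WORDS = {"stop", "cancel", "never mind"}


class VoiceSession:
    """Minimal sketch of a session that honours a stop keyword at any point."""

    def __init__(self) -> None:
        self.pending: List[str] = []  # tasks queued but not yet finished
        self.asleep = False

    def handle(self, utterance: str) -> str:
        # Normalise so "Stop!" and "stop" are treated the same.
        text = utterance.lower().strip().strip(string.punctuation).strip()
        if text in STOP_WORDS:
            self.pending.clear()  # scrap current and future tasks
            self.asleep = True    # return to sleep mode
            return "Okay, stopped."
        self.asleep = False
        self.pending.append(text)
        return "On it."


session = VoiceSession()
session.handle("Play some jazz")
session.handle("Read the weather forecast")
print(session.handle("Stop!"))
print(session.pending, session.asleep)
```

Matching only whole utterances keeps the sketch short; a real product would also need to catch phrases such as “stop playing”.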

Consistency and standards is a particularly interesting heuristic for voice user interfaces. As the authors realized, there is no precise concept of consistency for VUIs yet. In a young field that is still taking shape, we just don’t know what consistency in these products would entail.

As the designer orchestrating the system, the best you can do is to invest time and effort into the vocabulary of the system. Make sure you have all the important terms down, and that the interface doesn’t deviate into other words that users may not be familiar with.

On error prevention, the paper found that designers are obliged to prevent errors through design. However, Myers et al. (2018) found an interesting counterpoint: in voice UIs, errors didn’t do much to impair the interaction.

There are many possible reasons for that, but in conversation, saying something twice is far less work than doing something twice with your hands, such as filling out a sign-in form. Filling out that form a second time because of an error would be very annoying, while repeating a sentence wouldn’t have the same negative effect.

Recognition rather than recall is the idea that users shouldn’t have to remember information from earlier parts of the product in order to use it. This matters even more in voice user interfaces, because the product can only say information out loud, leaving the user to remember it on their own.

Remembering information once it’s been said is difficult for most people, so your product shouldn’t overload users with it; that would increase the chance of miscommunication or poor decisions. Let’s go over a silly example of how that would work.

The user is at home and wants to go out to eat, so they ask the product where the closest restaurant is. The answer would likely include several restaurants near the user, but simply listing them all would confuse the user or send them to a random place.

Instead, aim to have the product ask what kind of cuisine they would prefer, or if they wish to hear about places within walking distance.
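The restaurant example can be sketched as a response function that asks a narrowing question whenever there are too many options to read out. The `Place` records, the three-option limit and the phrasing are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_SPOKEN = 3  # assumption: read out at most three options at a time


@dataclass
class Place:
    name: str
    cuisine: str
    km: float  # distance from the user


def join_spoken(items: List[str], conjunction: str) -> str:
    """Join items the way they are said aloud, e.g. 'A, B or C'."""
    if len(items) == 1:
        return items[0]
    return ", ".join(items[:-1]) + f" {conjunction} " + items[-1]


def respond(places: List[Place], cuisine: Optional[str] = None,
            max_km: Optional[float] = None) -> str:
    options = [
        p for p in places
        if (cuisine is None or p.cuisine == cuisine)
        and (max_km is None or p.km <= max_km)
    ]
    if not options:
        return "I couldn't find a place like that. Would you like to try something else?"
    if len(options) > MAX_SPOKEN:
        cuisines = sorted({p.cuisine for p in options})
        if cuisine is None and len(cuisines) > 1:
            # Ask a narrowing question instead of reading out a long list.
            return f"What kind of food do you feel like: {join_spoken(cuisines, 'or')}?"
        if max_km is None:
            return "Would you like to hear only places within walking distance?"
    # Few enough options (or no way left to narrow): read out the closest ones.
    closest = sorted(options, key=lambda p: p.km)[:MAX_SPOKEN]
    return "Closest first: " + join_spoken([p.name for p in closest], "and") + "."


PLACES = [
    Place("Luigi's", "Italian", 0.4),
    Place("Sakura", "Japanese", 1.5),
    Place("Pasta Bar", "Italian", 0.9),
    Place("Taco Stand", "Mexican", 0.2),
    Place("Noodle House", "Japanese", 2.0),
]

print(respond(PLACES))
print(respond(PLACES, cuisine="Italian"))
```

With five nearby places, the first call asks about cuisine rather than listing them; once the user answers “Italian”, only two options remain, so they are simply read out.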

Flexibility and efficiency of use is another interesting aspect of voice user interface design. In graphical interfaces, users often enjoy keyboard shortcuts that increase productivity. But how do you reflect that in voice commands? It turns out you may not have to.

Begany et al. (2016) found that speaking not only comes naturally (no need to learn shortcuts), but is also inherently more efficient than keyboard typing. When users can simply say their command, there is no need to look around the UI for what they want.

While the very nature of voice UIs may be more efficient, there is still the matter of tailoring the experience. Nielsen recommends allowing users to tailor the experience of frequent actions, giving the product flexibility to adapt to the user. In voice products, that may also be achieved by simply allowing the user to tailor the entire experience at the setup stage.

Let users customize the product wherever it makes sense, such as how the product should refer to them. Do they prefer their first name? Perhaps an entertaining nickname? These are small things that have quite an impact on the overall experience.
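A setup-time preference like this could be as simple as a stored profile that every response consults. This Python sketch assumes a hypothetical `Profile` with a first name and an optional nickname; it is not how any particular assistant stores preferences.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    """Hypothetical preferences collected once, during setup."""
    first_name: str
    nickname: str = ""
    prefers_nickname: bool = False

    def form_of_address(self) -> str:
        if self.prefers_nickname and self.nickname:
            return self.nickname
        return self.first_name


def greet(profile: Profile, hour: int) -> str:
    """Greet the user the way they asked to be addressed."""
    if hour < 12:
        part = "morning"
    elif hour < 18:
        part = "afternoon"
    else:
        part = "evening"
    return f"Good {part}, {profile.form_of_address()}."


print(greet(Profile("Maria"), 9))
print(greet(Profile("Maria", nickname="Captain", prefers_nickname=True), 20))
```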

While designers may not need to overthink the “aesthetics” of their voice user interface, there is something to be said about minimalism in dialogue. By now, we’ve established you want the product to offer information in small quantities – but how much information is too much?

Finding the right balance between what the user needs and what the user can handle is tricky. Ideally, you don’t want the product to say anything needlessly. Designers should see this usability heuristic as an invitation to rethink their dialogue. After all, minimalism is all about letting the unnecessary go.

The paper makes an interesting point on feedback. It is true that for voice UIs, offering feedback is necessary in order to keep communication on track. With that said, users also want to experience natural dialogue – similar to talking to another person on the phone.

If the person on the other side of the line kept saying “heard” or “got that” after every sentence, that phone call wouldn’t be enjoyable at all.

To illustrate: in 2018, Amazon’s Alexa got a new feature called “Brief Mode”, in which it plays a short sound instead of saying “okay” to confirm it understood the command.

The paper found that while users want feedback, they don’t want a mechanical voice that grows repetitive after the first use. Instead, they prefer implicit confirmations woven into the conversation itself. These are more challenging to design, but the final experience makes the extra work worth it.
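The difference between implicit and explicit confirmation can be sketched with response templates. In this hypothetical Python example, the understood values are woven into the answer itself, and an explicit question is used only when recognition confidence falls below a threshold; the intents, the templates and the 0.6 threshold are all assumptions.

```python
from typing import Dict

# Hypothetical templates. Implicit confirmations repeat what was understood
# as part of the answer, instead of a separate "got that".
IMPLICIT: Dict[str, str] = {
    "set_alarm": "Your alarm is set for {time}.",
    "play_music": "Here's some {genre}.",
}
# Explicit questions, used only when the system is unsure what it heard.
EXPLICIT: Dict[str, str] = {
    "set_alarm": "Did you want an alarm for {time}?",
    "play_music": "Did you want me to play {genre}?",
}

CONFIDENCE_THRESHOLD = 0.6  # assumption: tune against real recognition data


def confirm(intent: str, slots: Dict[str, str], confidence: float) -> str:
    templates = IMPLICIT if confidence >= CONFIDENCE_THRESHOLD else EXPLICIT
    return templates[intent].format(**slots)


print(confirm("set_alarm", {"time": "7 am"}, confidence=0.9))
print(confirm("play_music", {"genre": "jazz"}, confidence=0.4))
```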

The next heuristic is about helping users recognize, diagnose and recover from errors, which is hard when undoing something in a voice user interface is difficult. The paper found that one of the key challenges designers face when creating VUIs is that users feel frustrated when the system misunderstands them, and misunderstandings can easily lead to errors.

And that is the other side of the coin: errors. Error messages matter in any kind of interface, and Nielsen was right that an error message shouldn’t just say something is wrong. It must say what is wrong and how the user can correct the situation.

In voice UIs, you want the error message to be brief, to the point and in a tone that fits the context. For example, an error message might include a sentence such as “I don’t understand” or “please repeat”. It should also include specifics, such as “please speak louder” or “please specify what type of XXX you mean”.
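A sketch of this idea: first diagnose what went wrong, then pick a short message that says how to fix it. The `Problem` categories, the -40 dBFS loudness threshold and the prompt wording are hypothetical and would need tuning with real users.

```python
from enum import Enum
from typing import List, Optional

QUIET_DB = -40.0  # assumption: input level (dBFS) below which speech is "too quiet"


class Problem(Enum):
    TOO_QUIET = "too quiet"
    NO_MATCH = "no match"
    AMBIGUOUS = "ambiguous"


def diagnose(volume_db: float, matches: List[str]) -> Optional[Problem]:
    """Work out what went wrong, so the prompt can say how to fix it."""
    if volume_db < QUIET_DB:
        return Problem.TOO_QUIET
    if not matches:
        return Problem.NO_MATCH
    if len(matches) > 1:
        return Problem.AMBIGUOUS
    return None  # exactly one match: nothing to report


def error_prompt(problem: Problem, matches: List[str]) -> str:
    """Short, specific error messages that tell the user what to do next."""
    if problem is Problem.TOO_QUIET:
        return "Sorry, I didn't catch that. Please speak a little louder."
    if problem is Problem.AMBIGUOUS:
        return "Which one do you mean: " + " or ".join(matches) + "?"
    return "Sorry, I don't understand. You can ask me to play music or set an alarm."


matches = ["the jazz playlist", "jazz radio"]
problem = diagnose(-20.0, matches)
if problem is not None:
    print(error_prompt(problem, matches))
```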

The last heuristic is help and documentation, and getting users to fully understand the product can be difficult. The paper found that documentation, in the classic sense, might fall flat with users who are after the hands-free experience of VUIs. However, users responded really well to interactive tutorials before using the product for the first time.

Tutorials help users get familiar with the capabilities and functions of the voice product, since they can’t explore it by navigating an interface. The paper also found that users benefited even more when help was available throughout the interaction, offered in a way they could accept only if they needed it.

For example, imagine you are using a voice assistant in your smart home. You instruct the assistant to turn on the alarm system – but you’ve forgotten to lock the back door.

At this point, the system would offer to help you secure the house, saying something along the lines of “it appears the back door is unlocked. Would you like me to lock it?”, and then lock it if you agree.
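The smart-home scenario can be sketched as a controller that checks the doors before arming the alarm and offers to lock any that are open. The `SmartHome` class and its door states are hypothetical, written only to show help that the user can accept or decline.

```python
from typing import Dict, List, Optional


class SmartHome:
    """Hypothetical home controller that offers help before arming the alarm."""

    def __init__(self, doors: Dict[str, bool]) -> None:
        self.doors = dict(doors)  # door name -> is it locked?
        self.armed = False
        self.pending: Optional[List[str]] = None  # doors we offered to lock

    def request_arm(self) -> str:
        unlocked = [name for name, locked in self.doors.items() if not locked]
        if not unlocked:
            self.armed = True
            return "The alarm is on."
        # Offer help instead of silently arming with a door open.
        self.pending = unlocked
        names = " and ".join(f"the {name} door" for name in unlocked)
        verb = "is" if len(unlocked) == 1 else "are"
        pronoun = "it" if len(unlocked) == 1 else "them"
        return f"It appears {names} {verb} unlocked. Would you like me to lock {pronoun}?"

    def answer(self, yes: bool) -> str:
        if self.pending is None:
            return "There's nothing to confirm."
        if yes:
            for name in self.pending:
                self.doors[name] = True
        self.pending = None
        self.armed = True  # the user stays in control: "no" still arms the alarm
        return "Locked. The alarm is on." if yes else "Okay. The alarm is on."


home = SmartHome({"front": True, "back": False})
print(home.request_arm())
print(home.answer(yes=True))
```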

Right. Now that we’ve gone over the basics of usability in voice user interfaces, let’s look at some top examples. These products are groundbreaking, and they are pushing the limits of the virtual assistants available to the general public.

An interesting fact: Loop Ventures carried out a study comparing Siri, Alexa, Google Assistant and Cortana. Each VUI was asked 800 questions and graded on two criteria: whether it understood the question correctly, and whether it answered it correctly.

The result? Google Assistant was the grand winner, understanding all of the questions and answering 86% of them correctly.

Amazon’s virtual assistant has grown into a sector giant since its initial release in 2014. According to Statista, Alexa accounted for 62% of the global intelligent assistant market in 2017. The reasons for Alexa’s popularity are debatable, but it does have some key strategic traits that surely contribute to its success.

For starters, Alexa comes with all sorts of integrations and additional functionalities. Classic functions such as setting an alarm are already built-in – but many others are available should users want them. In a smart move, Amazon made Alexa an open platform where anyone can design a new “skill” – which encouraged large waves of new skills that just keep coming.

This makes Alexa a very versatile assistant, given it can be entirely customized to each individual user’s needs. It’s quite a smart way to make the voice user interface flexible – one that hasn’t been matched by Siri, Cortana or any other virtual assistant in the market.

We also love that Alexa can be purchased on different devices across a range of prices, from the Echo Dot ($49.99) to the Echo Show ($229.99). Users can even connect devices that aren’t made by Amazon to Alexa, such as other speakers for better audio quality.

Google’s own virtual assistant may not enjoy a dominant market share like Alexa, but it is impressive in its own way. For example, you can not only set up the voice user interface to recognize and respond to 6 different voices, but also customize different responses for each user.

An interesting trait of Google Assistant is that users can change the product’s voice. Other VUIs are reluctant to let users change the product’s voice entirely, as that would fragment the personality of the product itself.

Google, however, seems unconcerned, perhaps because its brand is already known to most people in the world. And so it gives users control over the product’s voice.

While Google goes to great lengths to offer a customizable experience, users are largely limited to its built-in functionalities. Google does roll out frequent updates that bring new features, but users can’t expect the sheer number of functionalities that Alexa enjoys.

Like Alexa, Google’s Assistant can be purchased with several products. They include Google Home Mini ($29), Google Home ($79), Home Hub ($109.99) all the way to Home Max ($399).

Siri was the first true voice user interface product available to the general public. While Siri has been around for a long time now, it has recently been in the public spotlight for all the wrong reasons. After the Guardian published a news article in July 2019, Apple found its virtual assistant division in hot water.

The issue was that Apple had been using third-party contractors to listen to and analyse real users’ interactions with Siri. That alone raises privacy concerns, but it was made worse by the fact that Siri can be accidentally triggered by sounds other than the classic “Hey Siri”.

This led to contractors hearing all sorts of personal conversations, from medical information to sexual encounters and even criminal activity.

Apple, true to the market leader it is, responded with a swift change to Siri. Siri is now one of the few voice user interfaces on the market that does not keep recordings of interactions by default. Users can also delete their recordings should they wish to.

Apple managed to turn a crisis into a real upside for its product: users can now use Siri without their recordings being kept, while Alexa users don’t get that same default.

Cortana has gone through some very rocky waters lately. Microsoft had first imagined its voice user interface to be a constant presence in its devices, in a similar fashion to Apple’s Siri. Cortana was once a big factor in Windows 10 – but even that seems to be no longer the case. Microsoft is quickly changing tactics, turning Cortana into an app instead.

Cortana didn’t shape up the way Microsoft had hoped, but there is still some hope left for it. Microsoft shocked the market when it admitted that Cortana was no longer a competitor to Alexa or Google Assistant, but rather an add-on to both of them.

Microsoft has already managed to secure a Cortana-Alexa integration, in which you can access Cortana via Alexa with a simple “Alexa, open Cortana”. The idea is that both assistants would serve the user in different types of tasks throughout the day – coming together to add value.

This initiative is still in its early steps, so we are likely to hear a lot more details about how this union will work soon.

Seeing all the new developments in the VUI field, it’s only natural that many UX designers would explore this new and exciting front. And while we are avid readers here at Justinmind, we understand that sometimes you need an actual class to truly learn – especially something as complex as this subject.

It is worth mentioning that most of these courses are connected in some way to Amazon’s Alexa, whose open skills platform lets anyone design for it.

Alexa is being used as the prime focus of students everywhere trying to get more familiar with VUIs. While it is true that Amazon benefits from an increasingly large number of Alexa Skills available to consumers, students walk away with precious insight. A win-win!

This course is the perfect quick introduction to Alexa, and to voice user interfaces in general. Offered by Amazon itself, it takes only 1 hour and is completely free of charge. The course isn’t meant to be a thorough education on VUIs, but rather a brief introduction to the world of voice interfaces.

The course revolves around the process Amazon used to build the “Foodie” skill for Alexa. It asks students to analyse interactions and work through a series of exercises meant to help them grasp how complex human conversation truly is.

Topics in this course include dialog management, context switching, and memory and persistence, along with other traits of human communication.

- This course can be completed within 1 hour

- Price: free of charge

This course aims to not just introduce designers to the art of voice user interface design but to also set them up as professionals right upon completion. Voice User Interface Design by CareerFoundry is in close collaboration with Amazon, which opens the door to some exciting opportunities.

For example, students will learn more than the basic concepts behind VUI design: by the end of the course, they will have created 3 Alexa skills from scratch. Students can expect to learn everything from creating user personas for VUIs to working with AWS Lambda.

- The course takes approximately 120 hours to complete at your own pace

- Price: €1615 with other payment options available

Not everyone can pick up a new skill without a teacher and face-to-face learning. Offered by My UX Academy in London, this voice user interface design course might be the perfect choice for students after a more personal touch. It is also a collaboration with Amazon, with Alexa at the forefront of the syllabus.

Students can expect to learn all the basics, from theory and conceptualization to the use cases for voice products. Even better, students will also learn to prototype voice products, from low to high fidelity, followed by usability testing!

All students are required to create an Alexa skill from scratch, and go all the way to the finish line of the product development process. Not just designing and creating the skill, but also having it certified by Amazon, accounting for discoverability, monetization… and writing a case study about it all!

- Classes are 6pm to 8.30pm for 6 weeks

- Classes have a maximum of 12 people

- Price: £950

Unlike the previous courses, this voice user interface design class is not aimed at beginners. Offered on Udemy, this course is a viable option for students with working knowledge of JavaScript and UX design in general.

This online course is all about covering your bases. Students go through a brief review of Alexa and voice user interfaces as a whole before moving on to more complex topics. A whole section of the course is dedicated to JavaScript, with a basic overview of the language, from statements and data types to loops and Node.js.

Students will learn the process of creating not one, not two – but five Alexa skills. The skills students will work on vary in nature, so you can experience different sides of voice user interface design.

- This course is self-paced, with about 4 hours of recorded video

- Price: €149.99

Voice user interface design is truly futuristic. There is still so much left to discover about the potential of this field, and all that it can grow to be. Just as UX design took shape and evolved over time, so will VUIs.

Designers who are willing to step into the world of voice interfaces have exciting things ahead of them. Whether it’s studying up on Alexa skills or building for Google Assistant, designers everywhere will be watching the sector’s development closely.
